#interactive training software

Text

The Benefits of Virtual Reality Training

Virtual reality (VR) technology is transforming how we approach training across industries.

With its immersive, interactive, and individualized nature, VR training offers many benefits over conventional training methods: it engages employees and gives businesses a powerful new training modality.

In this blog, we will examine the advantages of VR training and discuss its applications.

Benefits of VR training covered in this blog post:

Safe and Controlled Learning Environment

VR Training Is Cost-Effective

Psychological Realism

Greater Training Engagement and Knowledge Retention

VR Training Helps With Recruiting and Employee Retention

Flexible and Scalable Deployment

Safe and Controlled Learning Environment

One of the key benefits of VR training is that it creates a safe and controlled learning environment for learners.

VR training transports learners into a virtual environment where they are free to make mistakes without real-world consequences. You’ll often hear people refer to VR training as a “safe place to fail.” That’s because in virtual reality learning simulations, employees are free to practice their skills and see the cause-and-effect relationships of their actions without suffering any real-world consequences. This kind of scenario-based learning helps learners make meaning of their training experiences and become more familiar with navigating high-stakes situations, and that familiarity translates to better performance in the real world.

For example:

Business leaders can practice critical conversations in VR before facing the real thing with peers or direct reports

Customer service reps can practice simulated customer conversations before talking to real customers in conversations where the stakes are high

Sales staff can practice customer pitches

Healthcare professionals can practice talking with virtual humans representing patients before having serious conversations with real patients

These are just a few examples of situations where training in virtual reality gives professionals risk-free practice repetitions before they do their jobs in the real world.

For more information, see: virtual training platforms

#interactive training software#virtual reality training solutions#virtual training#virtual training platforms#leadership skill development#skill development program

0 notes

Text

Does age tech take into account that the newcomers are baby boomers who know what tech and AI are?

Age tech is evolving as baby boomers, who make up a significant portion of today's seniors, become increasingly familiar with technology.

Here's how this shows up:

Designing more intuitive interfaces

Age tech developers strive to create user interfaces that are simple, clean, and easy to navigate, drawing inspiration from design principles used in consumer apps and devices.

This includes large touchscreens, self-explanatory icons, voice commands and interactive tutorials.

Integration of artificial intelligence (AI)

AI is increasingly used in age tech to personalize experiences, anticipate needs and provide proactive assistance.

For example, AI systems can analyze health data to detect anomalies, recommend personalized exercises or remind people to take medication.

Chatbots and AI-powered virtual assistants also facilitate communication and access to information.

Adapting to Baby Boomers' Technological Habits

Age tech companies are conducting studies to understand how baby boomers use technology and what their specific needs are.

This allows us to develop products and services that fit seamlessly into their daily lives, taking into account their preferences in communication, entertainment and health management.

For example, knowing that many Baby Boomers use social networks like Facebook allows companies to create products that use this platform as a means of communication.

Training and support

There are many initiatives aimed at providing older people with personalized training and support to help them use age tech.

This may include workshops, online tutorials, in-home demonstrations and telephone support.

In short, age tech recognizes that baby boomers are increasingly comfortable with technology and adapts its solutions accordingly.

The goal is to create technologies that are user-friendly, useful and tailored to the needs and preferences of this generation.

Go further

#Access to information#Technological adaptation#Age Tech#Health management assistance#Proactive assistance#Virtual Assistants#Baby boomers#Chatbots#Voice commands#Communication#Friendliness#Market research#Facebook#Personalized training#Senior technology training#Technology habits#Artificial Intelligence (AI)#Touch interfaces#Intuitive user interfaces#Software and applications#Connected objects#Personalization#Social networks#Technology and aging#Interactive tutorials#Post navigation#Previous#“Age tech” is a growing field#and it is important to distinguish it from “health tech.”

0 notes

Text

#Technology Magazine#Free Online Tool#Interactive Tools and Collection#Internet Tools#SEO Tools#Learn Search Engine Optimization#Computer Tips#Freelancer#Android#Android Studio#BlogSpot and Blogging#Learn WordPress#Learn Joomla#Learn Drupal#Learn HTML#CSS Code#Free JavaScript Code#Photo and Image Editing Training#Make Money Online#Online Learning#Product Review#Web Development Tutorial#Windows OS Tips#Digital Marketing#Online Converter#Encoder and Decoder#Code Beautifier#Code Generator#Code Library#Software

0 notes

Text

Medical Transcription Training: Become a Certified Medical Transcriptionist with Transorze Solutions

#Medical Transcription Training#Medical Transcription Certification#Medical Transcription Course#Online Medical Transcription Training#Home-Based Medical Transcription Jobs#Medical Transcription Course in Palakkad#Medical Transcription Institute#Work From Home Medical Transcription#Medical Transcription Online Classes#Medical Transcription Career#Flexible Medical Transcription Jobs#Medical Transcription Salary#Medical Transcription Job Opportunities#Transcription Software Proficiency#Live Interactive Medical Transcription Classes#Medical Transcription Training with Job Assurance#Home-Based Medical Transcription Career#Medical Transcription Certification in Palakkad.

0 notes

Text

India's virtual reality landscape is thriving, with several companies leading the charge in innovation and immersive experiences. Here’s a list of notable VR companies, including Simulanis Solutions:

#VR Development Companies India#Virtual Reality Solutions India#Top VR Companies India#VR App Development India#Immersive VR Technologies India#Virtual Reality for Training India#AR/VR Companies India#VR Content Creation India#Best VR Companies in India#VR Game Development India#Virtual Reality Startups India#VR Simulations India#VR Software Development India#VR Experience Design India#Enterprise VR Solutions India#VR for Education India#Custom VR Solutions India#Healthcare VR Companies India#Interactive VR Solutions India#Virtual Reality Services India

0 notes

Text

On-demand and Customized Learning Management System

Tecnolynx designs adaptive learning pathways through a personalized, reliable, and user-friendly learning management system (LMS) platform. Our skilled developers excel in creating custom LMS solutions, handling integration, and overseeing implementation, all tailored to fit your unique learning requirements.

Looking for a powerful LMS solution? Connect with Tecnolynx for a customized and robust learning management system tailored to your needs.

#Learning Management System (LMS)#eLearning solutions#LMS development#Customized eLearning platforms#Online training software#Virtual learning environments#Educational technology solutions#Learning content management system (LCMS)#Interactive learning experiences#Personalized learning platforms

0 notes

Text

Anthropic's stated "AI timelines" seem wildly aggressive to me.

As far as I can tell, they are now saying that by 2028 – and possibly even by 2027, or late 2026 – something they call "powerful AI" will exist.

And by "powerful AI," they mean... this (source, emphasis mine):

In terms of pure intelligence, it is smarter than a Nobel Prize winner across most relevant fields – biology, programming, math, engineering, writing, etc. This means it can prove unsolved mathematical theorems, write extremely good novels, write difficult codebases from scratch, etc. In addition to just being a “smart thing you talk to”, it has all the “interfaces” available to a human working virtually, including text, audio, video, mouse and keyboard control, and internet access. It can engage in any actions, communications, or remote operations enabled by this interface, including taking actions on the internet, taking or giving directions to humans, ordering materials, directing experiments, watching videos, making videos, and so on. It does all of these tasks with, again, a skill exceeding that of the most capable humans in the world. It does not just passively answer questions; instead, it can be given tasks that take hours, days, or weeks to complete, and then goes off and does those tasks autonomously, in the way a smart employee would, asking for clarification as necessary. It does not have a physical embodiment (other than living on a computer screen), but it can control existing physical tools, robots, or laboratory equipment through a computer; in theory it could even design robots or equipment for itself to use. The resources used to train the model can be repurposed to run millions of instances of it (this matches projected cluster sizes by ~2027), and the model can absorb information and generate actions at roughly 10x-100x human speed. It may however be limited by the response time of the physical world or of software it interacts with. Each of these million copies can act independently on unrelated tasks, or if needed can all work together in the same way humans would collaborate, perhaps with different subpopulations fine-tuned to be especially good at particular tasks.

In the post I'm quoting, Amodei is coy about the timeline for this stuff, saying only that

I think it could come as early as 2026, though there are also ways it could take much longer. But for the purposes of this essay, I’d like to put these issues aside [...]

However, other official communications from Anthropic have been more specific. Most notable is their recent OSTP submission, which states (emphasis in original):

Based on current research trajectories, we anticipate that powerful AI systems could emerge as soon as late 2026 or 2027 [...] Powerful AI technology will be built during this Administration. [i.e. the current Trump administration -nost]

See also here, where Jack Clark says (my emphasis):

People underrate how significant and fast-moving AI progress is. We have this notion that in late 2026, or early 2027, powerful AI systems will be built that will have intellectual capabilities that match or exceed Nobel Prize winners. They’ll have the ability to navigate all of the interfaces… [Clark goes on, mentioning some of the other tenets of "powerful AI" as in other Anthropic communications -nost]

----

To be clear, extremely short timelines like these are not unique to Anthropic.

Miles Brundage (ex-OpenAI) says something similar, albeit less specific, in this post. And Daniel Kokotajlo (also ex-OpenAI) has held views like this for a long time now.

Even Sam Altman himself has said similar things (though in much, much vaguer terms, both on the content of the deliverable and the timeline).

Still, Anthropic's statements are unique in being

official positions of the company

extremely specific and ambitious about the details

extremely aggressive about the timing, even by the standards of "short timelines" AI prognosticators in the same social cluster

Re: ambition, note that the definition of "powerful AI" seems almost the opposite of what you'd come up with if you were trying to make a confident forecast of something.

Often people will talk about "AI capable of transforming the world economy" or something more like that, leaving room for the AI in question to do that in one of several ways, or to do so while still failing at some important things.

But instead, Anthropic's definition is a big conjunctive list of "it'll be able to do this and that and this other thing and...", and each individual capability is defined in the most aggressive possible way, too! Not just "good enough at science to be extremely useful for scientists," but "smarter than a Nobel Prize winner," across "most relevant fields" (whatever that means). And not just good at science but also able to "write extremely good novels" (note that we have a long way to go on that front, and I get the feeling that people at AI labs don't appreciate the extent of the gap [cf]). Not only can it use a computer interface, it can use every computer interface; not only can it use them competently, but it can do so better than the best humans in the world. And all of that is in the first two paragraphs – there's four more paragraphs I haven't even touched in this little summary!

Re: timing, they have even shorter timelines than Kokotajlo these days, which is remarkable since he's historically been considered "the guy with the really short timelines." (See here where Kokotajlo states a median prediction of 2028 for "AGI," by which he means something less impressive than "powerful AI"; he expects something close to the "powerful AI" vision ["ASI"] ~1 year or so after "AGI" arrives.)

----

I, uh, really do not think this is going to happen in "late 2026 or 2027."

Or even by the end of this presidential administration, for that matter.

I can imagine it happening within my lifetime – which is wild and scary and marvelous. But in 1.5 years?!

The confusing thing is, I am very familiar with the kinds of arguments that "short timelines" people make, and I still find Anthropic's timelines hard to fathom.

Above, I mentioned that Anthropic has shorter timelines than Daniel Kokotajlo, who "merely" expects the same sort of thing in 2029 or so. This probably seems like hairsplitting – from the perspective of your average person not in these circles, both of these predictions look basically identical, "absurdly good godlike sci-fi AI coming absurdly soon." What difference does an extra year or two make, right?

But it's salient to me, because I've been reading Kokotajlo for years now, and I feel like I basically understand his case. And people, including me, tend to push back on him in the "no, that's too soon" direction. I've read many many blog posts and discussions over the years about this sort of thing, I feel like I should have a handle on what the short-timelines case is.

But even if you accept all the arguments evinced over the years by Daniel "Short Timelines" Kokotajlo, even if you grant all the premises he assumes and some people don't – that still doesn't get you all the way to the Anthropic timeline!

To give a very brief, very inadequate summary, the standard "short timelines argument" right now is like:

Over the next few years we will see a "growth spurt" in the amount of computing power ("compute") used for the largest LLM training runs. This factor of production has been largely stagnant since GPT-4 in 2023, for various reasons, but new clusters are getting built and the metaphorical car will get moving again soon. (See here)

By convention, each "GPT number" uses ~100x as much training compute as the last one. GPT-3 used ~100x as much as GPT-2, and GPT-4 used ~100x as much as GPT-3 (i.e. ~10,000x as much as GPT-2).

We are just now starting to see "~10x GPT-4 compute" models (like Grok 3 and GPT-4.5). In the next few years we will get to "~100x GPT-4 compute" models, and by 2030 we will reach ~10,000x GPT-4 compute.

If you think intuitively about "how much GPT-4 improved upon GPT-3 (100x less) or GPT-2 (10,000x less)," you can maybe convince yourself that these near-future models will be super-smart in ways that are difficult to precisely state/imagine from our vantage point. (GPT-4 was way smarter than GPT-2; it's hard to know what "projecting that forward" would mean, concretely, but it sure does sound like something pretty special)

Meanwhile, all kinds of (arguably) complementary research is going on, like allowing models to "think" for longer amounts of time, giving them GUI interfaces, etc.

All that being said, there's still a big intuitive gap between "ChatGPT, but it's much smarter under the hood" and anything like "powerful AI." But...

...the LLMs are getting good enough that they can write pretty good code, and they're getting better over time. And depending on how you interpret the evidence, you may be able to convince yourself that they're also swiftly getting better at other tasks involved in AI development, like "research engineering." So maybe you don't need to get all the way yourself, you just need to build an AI that's a good enough AI developer that it improves your AIs faster than you can, and then those AIs are even better developers, etc. etc. (People in this social cluster are really keen on the importance of exponential growth, which is generally a good trait to have but IMO it shades into "we need to kick off exponential growth and it'll somehow do the rest because it's all-powerful" in this case.)
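To make the compute arithmetic in the summary above concrete, here is a minimal sketch. It assumes only the stated convention (one "GPT number" is roughly a 100x jump in training compute); the function and constant names are mine, made up for illustration, and nothing here is a claim about how any lab actually names or sizes its models.

```python
import math

# Assumption taken from the convention described above:
# one "GPT number" is roughly a 100x jump in training compute.
COMPUTE_PER_GPT_NUMBER = 100.0


def gpt_numbers_gained(compute_multiple: float) -> float:
    """Convert a training-compute multiple into "GPT numbers" under that convention."""
    return math.log10(compute_multiple) / math.log10(COMPUTE_PER_GPT_NUMBER)


def compute_multiple_for(gpt_numbers: float) -> float:
    """Inverse: the compute multiple implied by a given count of GPT-number steps."""
    return COMPUTE_PER_GPT_NUMBER ** gpt_numbers


if __name__ == "__main__":
    # Multiples of GPT-4 training compute mentioned in the summary.
    for multiple in (10, 100, 10_000):
        steps = gpt_numbers_gained(multiple)
        print(f"~{multiple:,}x GPT-4 compute is about {steps:g} GPT number(s) past GPT-4")
```

On this reckoning the "~10x" models are half a step past GPT-4, and the ~10,000x figure for 2030 is two full steps, i.e. the same distance as GPT-2 to GPT-4.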

And like, I have various disagreements with this picture.

For one thing, the "10x" models we're getting now don't seem especially impressive – there has been a lot of debate over this of course, but reportedly these models were disappointing to their own developers, who expected scaling to work wonders (using the kind of intuitive reasoning mentioned above) and got less than they hoped for.

And (in light of that) I think it's double-counting to talk about the wonders of scaling and then talk about reasoning, computer GUI use, etc. as complementary accelerating factors – those things are just table stakes at this point, the models are already maxing out the tasks you had defined previously, you've gotta give them something new to do or else they'll just sit there wasting GPUs when a smaller model would have sufficed.

And I think we're already at a point where nuances of UX and "character writing" and so forth are more of a limiting factor than intelligence. It's not a lack of "intelligence" that gives us superficially dazzling but vapid "eyeball kick" prose, or voice assistants that are deeply uncomfortable to actually talk to, or (I claim) "AI agents" that get stuck in loops and confuse themselves, or any of that.

We are still stuck in the "Helpful, Harmless, Honest Assistant" chatbot paradigm – no one has seriously broken with it since Anthropic introduced it in a paper in 2021 – and now that paradigm is showing its limits. ("Reasoning" was strapped onto this paradigm in a simple and fairly awkward way, the new "reasoning" models are still chatbots like this, no one is actually doing anything else.) And instead of "okay, let's invent something better," the plan seems to be "let's just scale up these assistant chatbots and try to get them to self-improve, and they'll figure it out." I won't try to explain why in this post (IYI I kind of tried to here) but I really doubt these helpful/harmless guys can bootstrap their way into winning all the Nobel Prizes.

----

All that stuff I just said – that's where I differ from the usual "short timelines" people, from Kokotajlo and co.

But OK, let's say that for the sake of argument, I'm wrong and they're right. It still seems like a pretty tough squeeze to get to "powerful AI" on time, doesn't it?

In the OSTP submission, Anthropic presents their latest release as evidence of their authority to speak on the topic:

In February 2025, we released Claude 3.7 Sonnet, which is by many performance benchmarks the most powerful and capable commercially-available AI system in the world.

I've used Claude 3.7 Sonnet quite a bit. It is indeed really good, by the standards of these sorts of things!

But it is, of course, very very far from "powerful AI." So like, what is the fine-grained timeline even supposed to look like? When do the many, many milestones get crossed? If they're going to have "powerful AI" in early 2027, where exactly are they in mid-2026? At end-of-year 2025?

If I assume that absolutely everything goes splendidly well with no unexpected obstacles – and remember, we are talking about automating all human intellectual labor and all tasks done by humans on computers, but sure, whatever – then maybe we get the really impressive next-gen models later this year or early next year... and maybe they're suddenly good at all the stuff that has been tough for LLMs thus far (the "10x" models already released show little sign of this but sure, whatever)... and then we finally get into the self-improvement loop in earnest, and then... what?

They figure out how to squeeze even more performance out of the GPUs? They think of really smart experiments to run on the cluster? Where are they going to get all the missing information about how to do every single job on earth, the tacit knowledge, the stuff that's not in any web scrape anywhere but locked up in human minds and inaccessible private data stores? Is an experiment designed by a helpful-chatbot AI going to finally crack the problem of giving chatbots the taste to "write extremely good novels," when that taste is precisely what "helpful-chatbot AIs" lack?

I guess the boring answer is that this is all just hype – tech CEO acts like tech CEO, news at 11. (But I don't feel like that can be the full story here, somehow.)

And the scary answer is that there's some secret Anthropic private info that makes this all more plausible. (But I doubt that too – cf. Brundage's claim that there are no more secrets like that now, the short-timelines cards are all on the table.)

It just does not make sense to me. And (as you can probably tell) I find it very frustrating that these guys are out there talking about how human thought will basically be obsolete in a few years, and pontificating about how to find new sources of meaning in life and stuff, without actually laying out an argument that their vision – which would be the common concern of all of us, if it were indeed on the horizon – is actually likely to occur on the timescale they propose.

It would be less frustrating if I were being asked to simply take it on faith, or explicitly on the basis of corporate secret knowledge. But no, the claim is not that, it's something more like "now, now, I know this must sound far-fetched to the layman, but if you really understand 'scaling laws' and 'exponential growth,' and you appreciate the way that pretraining will be scaled up soon, then it's simply obvious that –"

No! Fuck that! I've read the papers you're talking about, I know all the arguments you're handwaving-in-the-direction-of! It still doesn't add up!

280 notes

Text

"As a Deaf man, Adam Munder has long been advocating for communication rights in a world that chiefly caters to hearing people.

The Intel software engineer and his wife — who is also Deaf — are often unable to use American Sign Language in daily interactions, instead defaulting to texting on a smartphone or passing a pen and paper back and forth with service workers, teachers, and lawyers.

It can make simple tasks, like ordering coffee, more complicated than it should be.

But there are life events that hold greater weight than a cup of coffee.

Recently, Munder and his wife took their daughter in for a doctor’s appointment — and no interpreter was available.

To their surprise, their doctor said: “It’s alright, we’ll just have your daughter interpret for you!” ...

That day at the doctor’s office came at the heels of a thousand frustrating interactions and miscommunications — and Munder is not isolated in his experience.

“Where I live in Arizona, there are more than 1.1 million individuals with a hearing loss,” Munder said, “and only about 400 licensed interpreters.”

In addition to being hard to find, interpreters are expensive. And texting and writing aren’t always practical options — they leave out the emotion, detail, and nuance of a spoken conversation.

ASL is a rich, complex language with its own grammar and culture; a subtle change in speed, direction, facial expression, or gesture can completely change the meaning and tone of a sign.

“Writing back and forth on paper and pen or using a smartphone to text is not equivalent to American Sign Language,” Munder emphasized. “The details and nuance that make us human are lost in both our personal and business conversations.”

His solution? An AI-powered platform called Omnibridge.

“My team has established this bridge between the Deaf world and the hearing world, bringing these worlds together without forcing one to adapt to the other,” Munder said.

Trained on thousands of signs, Omnibridge is engineered to transcribe spoken English and interpret sign language on screen in seconds...

“Our dream is that the technology will be available to everyone, everywhere,” Munder said. “I feel like three to four years from now, we're going to have an app on a phone. Our team has already started working on a cloud-based product, and we're hoping that will be an easy switch from cloud to mobile to an app.” ...

At its heart, Omnibridge is a testament to the positive capabilities of artificial intelligence. "

-via GoodGoodGood, October 25, 2024. More info below the cut!

To test an alpha version of his invention, Munder welcomed TED associate Hasiba Haq on stage.

“I want to show you how this could have changed my interaction at the doctor appointment, had this been available,” Munder said.

He went on to explain that the software would generate a bi-directional conversation, in which Munder’s signs would appear as blue text and spoken word would appear in gray.

At first, there was a brief hiccup on the TED stage. Haq, who was standing in as the doctor’s office receptionist, spoke — but the screen remained blank.

“I don’t believe this; this is the first time that AI has ever failed,” Munder joked, getting a big laugh from the crowd. “Thanks for your patience.”

After a quick reboot, they rolled with the punches and tried again.

Haq asked: “Hi, how’s it going?”

Her words popped up in blue.

Munder signed in reply: “I am good.”

His response popped up in gray.

Back and forth, they recreated the scene from the doctor’s office. But this time Munder retained his autonomy, and no one suggested a 7-year-old should play interpreter.

Munder’s TED debut and tech demonstration didn’t happen overnight — the engineer has been working on Omnibridge for over a decade.

“It takes a lot to build something like this,” Munder told Good Good Good in an exclusive interview, communicating with our team in ASL. “It couldn't just be one or two people. It takes a large team, a lot of resources, millions and millions of dollars to work on a project like this.”

After five years of pitching and research, Intel handpicked Munder’s team for a specialty training program. It was through that backing that Omnibridge began to truly take shape...

“Our dream is that the technology will be available to everyone, everywhere,” Munder said. “I feel like three to four years from now, we're going to have an app on a phone. Our team has already started working on a cloud-based product, and we're hoping that will be an easy switch from cloud to mobile to an app.”

In order to achieve that dream — of transposing their technology to a smartphone — Munder and his team have to play a bit of a waiting game. Today, their platform necessitates building the technology on a PC, with an AI engine.

“A lot of things don't have those AI PC types of chips,” Munder explained. “But as the technology evolves, we expect that smartphones will start to include AI engines. They'll start to include the capability in processing within smartphones. It will take time for the technology to catch up to it, and it probably won't need the power that we're requiring right now on a PC.”

At its heart, Omnibridge is a testament to the positive capabilities of artificial intelligence.

But it is more than a transcription service — it allows people to have face-to-face conversations with each other. There’s a world of difference between passing around a phone or pen and paper and looking someone in the eyes when you speak to them.

It also allows Deaf people to speak ASL directly, without doing the mental gymnastics of translating their words into English.

“For me, English is my second language,” Munder told Good Good Good. “So when I write in English, I have to think: How am I going to adjust the words? How am I going to write it just right so somebody can understand me? It takes me some time and effort, and it's hard for me to express myself actually in doing that. This technology allows someone to be able to express themselves in their native language.”

Ultimately, Munder said that Omnibridge is about “bringing humanity back” to these conversations.

“We’re changing the world through the power of AI, not just revolutionizing technology, but enhancing that human connection,” Munder said at the end of his TED Talk.

“It’s two languages,” he concluded, “signed and spoken, in one seamless conversation.”"

-via GoodGoodGood, October 25, 2024

#ai#pro ai#deaf#asl#disability#translation#disabled#hard of hearing#hearing impairment#sign language#american sign language#languages#tech news#language#communication#good news#hope#machine learning

526 notes

Text

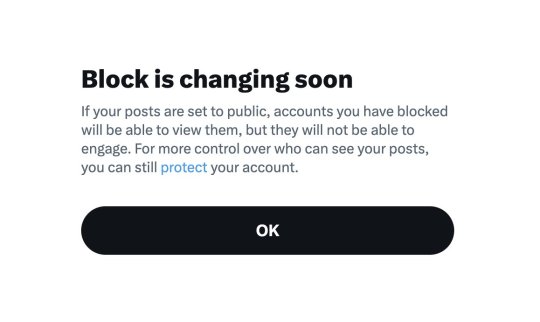

OK since I haven't seen too many people talk about this since twitter news usually strikes pretty fast over here whenever e'usk does anything ever, let me give y'all the rundown on two things that will go live on NOVEMBER 15TH and why people are mass migrating to Blue Sky once more; and provide resources to help protect your art and make the transition to Blue Sky easier if you so choose:

1. The Block function no longer blocks people as intended. It now basically acts as a glorified Mute button. Even when you block someone, they can still see your posts, but they can't engage in them. If your account is a Public one and not a Private one, people you blocked will see your posts.

They say because people can easily "share and hide harmful or private information about those they've blocked," they changed it this way for "greater transparency." When in reality, this is an extremely dangerous change, as the whole point of blocking is to cease interaction with people entirely for a plethora of reasons, e.g. stalking, harassment, spam, endangerment, or just plainly being annoying and not wanting to see said tweets/accounts. Or, you know, for 18+ accounts who do not want minors interacting with them or their material at all. (There is speculation saying these changes are specifically for Elon himself so he can do his own kind of stalking, and honestly, with the private likes change, it lowkey checks out in my opinion.)

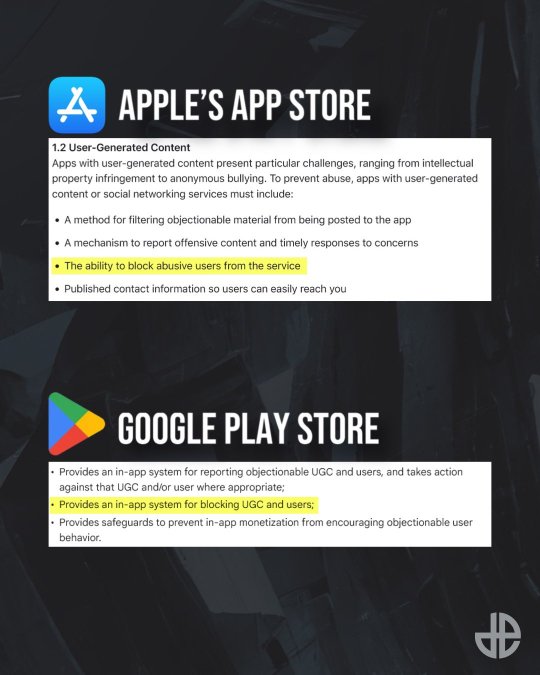

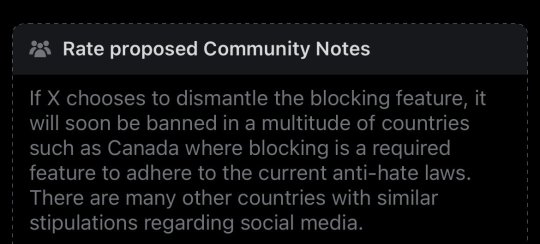

Also, this straight up goes against and may violate Apple and Google's app store policies and also is straight up illegal in Canada and probably other countries as well.

If this ACTUALLY goes through, twitter will only be available in select countries, probably exclusively in the US, which would collapse the site with the loss of users and stock, and probably be the last push it needs to kill the site. And if not, it will be a very sad and exclusive platform made for specific kinds of people who line up with musk's line of thinking.

2. New policies regarding Grok AI and basically removing the option to opt out of Grok's information gathering to improve their software.

Anything you upload/post on the site is considered "fair game" with "royalty-free licenses" and they can do whatever they please with it, primarily using any and all posts on twitter to train their Grok AI. A few months ago, there was a setting that let you opt out so they couldn't take anything you post to "improve" Grok, but I guess because so many people were opting out, they decided to make it mandatory as part of the policy change (This is mainly speculation from what I hear).

So this is considered the final straw for a LOT of people, especially artists who have been gripping on to twitter for as long as they can, but the AI nonsense is too much for people now, including myself. Lots of people are moving to Blue Sky for good reason, and from personal experience, it is literally 10x better than twitter ever was, even before elon took over. There is no algorithm on there, and you can save "feeds" to your timeline to have catered timelines to hop between if you're looking for something specific like furry art or game dev stuff. It's taken them a bit to get off the ground and add much needed features, but it's genuinely so much better now.

RESOURCES

Project Glaze & Cara

If you're an artist who's still on twitter or trying to ride it out for as long as you can for whatever reason you have, do yourself a favor and Glaze and/or Nightshade your work. Project Glaze is a free program designed to protect your artwork from getting scraped by AI machines. Glazing basically makes it harder to adapt and copy artwork that AI programs try to scan, while Nightshade basically "poisons" works to make AI libraries much more unstable and generate images completely off the mark. (These are layman's terms I'm using here, but follow the link to get more information)

The only problem with these programs is that they can be resource intensive for computers, and not every pc can run glaze. It's basically like rendering a frame/animation, you gotta let your pc sit there to get it glazed/nightshaded, and depending on the power of your pc and how much you wanna protect your work (the intensity), this may take minutes to hours.

HOWEVER, there are two alternatives, WebGlaze and Cara

WebGlaze is an in browser version of the program, so your pc doesn't have to do the heavy lifting. You do need to have an account with Glaze and be invited to use the program (I have not done so personally so I don't know much about the process.)

Cara is an artist focused site that doubles as both a portfolio site and a general social media platform. They've partnered with Glaze and have their own browser glazing called "Cara Glaze," and highly encourage users to post their work Glazed and are extremely anti-ai. You do get limited uses per day to glaze your work, so if you plan on doing a huge backlog uploading of your art, it may take a while if you're using just Cara Glaze.

Some twitter users have suggested glazing your art, cropping it, and overlaying it with a frame telling people to follow them elsewhere like on Bluesky. Here's a template someone provided if you wanna use this one or make your own.

Blue Sky Resources and Tips

So if you're a twitter user and you're about to realize the hellish task of refollowing a massive chunk of people you follow, have no fear, there's an extension called Sky Follower Bridge (Firefox & Chrome links). This is a very basic extension that makes it really easy to find people on Bluesky.

It sorts them out by trying to find matching usernames, usernames in descriptions, or by screen name. It's not 100% perfect, there's a couple people I already follow on Blue Sky that the extension could not match to their twitter accounts correctly, but I still found a huge chunk of people. Also if you're worried that this extension is "iffy," they do have a github open with the source publicly available and the Blue Sky Team themselves have promoted the extension in their recent posts while welcoming new users to the platform.
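For the curious, here's a rough sketch of what that kind of matching could look like. This is NOT the extension's actual code, just my guess at the three checks described above (same username, twitter handle mentioned in a Bluesky bio, same screen name). The function names and the dictionary fields are made up for the example.

```python
import re


def normalize(name):
    """Lowercase a name and drop everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def find_match(twitter_user, bluesky_users):
    """Return (bluesky_user, reason) for the first match, or (None, None).

    Hypothetical record shapes:
      twitter_user  = {"handle": ..., "display_name": ...}
      bluesky_users = [{"handle": ..., "display_name": ..., "description": ...}]
    """
    handle = normalize(twitter_user["handle"])
    display = normalize(twitter_user["display_name"])

    # 1. Same username (compare against the first part of the Bluesky handle).
    for b in bluesky_users:
        if normalize(b["handle"].split(".")[0]) == handle:
            return b, "handle"

    # 2. Twitter handle mentioned in the Bluesky description.
    mention = "@" + twitter_user["handle"].lower()
    for b in bluesky_users:
        if mention in b.get("description", "").lower():
            return b, "description"

    # 3. Same screen name (display name).
    for b in bluesky_users:
        if display and normalize(b.get("display_name", "")) == display:
            return b, "display_name"

    return None, None
```

Checking the strictest rule first means a looser match only gets used when nothing better exists, which also hints at why some people slip through: if someone changed both their handle and their screen name, none of the three checks can find them.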

FEEDS and LABELS

OK SO THE COOLEST PART ABOUT BLUESKY IS THE FEEDS SYSTEM. Basically if you've made a twitter list before, it's like that, but way more customizable and catered to specific types of posts/topics, consolidating them into a timeline/feed that's exclusively filled with posts about those particular topics, or just people in general. There's thousands to pick and choose from!

Here's a couple of mine that I have saved and ready (down below). Some feeds I have saved so I can jump to seeing what my friends and mutuals are up to, and see their posts specifically so it doesn't get lost in reposts or other accounts, and also specialized feeds for browsing artists within the furry community.

The Furry Community feeds I have here were created by people who've built an algorithm to place any #furry or #furryart or other special tags like #Furrystreamer or #furrydev. They even have one for commissions, and yes you can say commissions on a post and not have it destroyed or shadow banned. You are safe.

If you want, and I highly recommend it to get visibility and check out a neat community, follow furryli.st to get added to their list and feeds. Once you're on the list, even without a hashtag, you'll still pop up in their specialized feeds as just a member of the community there. There are plenty of other feeds out there besides this one, but I feel like a lot of people could use one like this. They even have OC-specific ones too, I remember seeing somewhere.
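If you're wondering how a feed like that decides what to show, here's a tiny sketch of the idea as I understand it: a post gets in if it uses one of the feed's tags, or if the author is on the community list. This is only an illustration and not how furryli.st or Bluesky's feed generators are actually implemented. The tag set, field names, and function names are made up.

```python
import re

# Hypothetical tag set for the example, based on the tags mentioned above.
FEED_TAGS = {"furry", "furryart", "furrystreamer", "furrydev"}


def hashtags(text):
    """Return the lowercase hashtags found in a post's text."""
    return {tag.lower() for tag in re.findall(r"#(\w+)", text)}


def in_feed(post, members):
    """A post qualifies if its author is a list member or it uses a feed tag."""
    return post["author"] in members or bool(hashtags(post["text"]) & FEED_TAGS)
```

So a list member posting with no tags at all still shows up, while a non-member has to use one of the tags.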

And in terms of labels, they can be either ways to help label yourself with specific things or have user created accessibility settings to help better control your experience on Blue Sky.

And my personal favorite: Ai Imagery Labeler. Removes any AI stuff or hides it to the best of its abilities, and it does a pretty good job. I have not seen anything AI related since subscribing to it.

Finally, HASHTAGS WORK & No need to censor yourself!

This is NOT like twitter or any other big named social media site AT ALL, so you don't have to work around words to get your stuff out there and be seen. There are literally feeds built around getting commissions and art seen! Some people worry about bots and that has been a recent issue since a lot of people are migrating to Blue Sky, but it comes with any social media territory.

ALSO COOL PART,

you can search a hashtag on someone's profile and search exclusively on that profile as well! You can even put the hashtag in your bio for easy access if you have a specialized tag like here on tumblr. OR EVEN BUILD YOUR OWN ART FEED FOR YOUR STUFF SPECIFICALLY!

So yeah, there's your quick rundown about twitter's current burning building, how to protect your art, and what to do when you move to Blue Sky! Have fun!

#Twitter#Blue Sky#BlueSky#Cara#Project Glaze#Glazed Art#NightShade#Twitter Update#cara artists#art resource#resource#Online resource

717 notes

Text

Noosciocircus agent backgrounds, former jobs at C&A, assigned roles, and current internal status.

Kinger

Former professor — Studied child psychology and computer science, moved into neobotanics via germination theory and seedlet development.

Seedlet trainer — Socialized and educated newly germinated seedlets to suit their future assignments. I.e. worked alongside a small team to serve as seedlets’ social parents, K-12 instructors, and upper-education mentors in rapid succession (about a year).

Intermediary — Inserted to assist cooperation and understanding of Caine.

Partially mentally mulekicked — Lives in state of forgetfulness after abstraction of spouse, is prone to reliving past from prior to event.

Ragatha

Former EMT — Worked in a rural community.

Semiohazard medic — Underwent training to treat and assess mulekick victims and to administer care in the presence of semiohazards.

Nootic health supervisor — Inserted to provide nootic endurance training, treat psychological mulekick, and maintain morale.

Obsessive-compulsive — Receives new agents and struggles to maintain morale among team and herself due to low trust in her honesty.

Jax

Former programmer — Gained experience when acquired out of university by a large software company.

Scioner — Developed virtual interfaces for seedlets to operate machinery with.

Circus surveyor — Inserted to assess and map nature of circus simulation, potentially finding avenues of escape.

Anomic — Detached from morals and social stake. Uncooperative and gleefully combative.

Gangle

Former navy sailor — Performed clerical work as a yeoman, served in one of the first semiotically-armed submarines.

Personnel manager — Recordkept C&A researcher employments and managed mess hall.

Task coordinator — Inserted to organize team effort towards escape.

Reclused — Abandoned task and lives in quiet, depressive state.

Zooble

No formal background — Onboarded out of secondary school for certification by C&A as part of a youth outreach initiative.

Mule trainer — Physically handled mules, living semiohazard conveyors for tactical use.

Semiohazard specialist — Inserted to identify, evaluate, and attempt to disarm semiotic tripwires.

Debilitated and self-isolating — Suffers chronic vertigo from randomly pulled avatar. Struggles to participate in adventures at risk of episode.

Pomni

Former accountant — Worked for a chemical research firm before completing her accreditation to become a biochemist.

Collochemist — Performed mesh checkups and oversaw industrial hormone synthesis.

Field researcher — Inserted to collect data from fellows and organize reports for indeterminate recovery. Versed in scientific conduct.

In shock — Currently acclimating to new condition. Fresh and overwhelming preoccupation with escape.

Caine

Neglected — Due to project deadline tightening, Caine’s socialization was expedited in favor of lessons pertinent to his practical purpose. Emerged a well-meaning but awkward and insecure individual unprepared for noosciocircus entrapment.

Prototype — Germinated as an experimental mustard, or semiotic filter seedlet, capable of subconsciously assembling semiohazards and detonating them in controlled conditions.

Nooscioarchitect — Constructs spaces and nonsophont AI for the agents to occupy and interact with using his asset library and computation power. Organizes adventures to mentally stimulate the agents, unknowingly lacing them with hazards.

Helpless — After semiohazard overexposure, an agent’s attachment to their avatar dissolves and their blackroom exposes, a process called abstraction. These open holes in the noosciocircus simulation spill potentially hazardous memories and emotion from the abstracted agent’s mind. Caine stores them in the cellar, a stimulus-free and infoproofed zone that calms the abstracted and nullifies emitted hazards. He genuinely cares about the inserted, but after only being able to do damage control for a continually deteriorating situation, his failure is beginning to weigh on him in a way he did not get to learn how to express.

#the amazing digital circus#noosciocircus#char speaks#digital circus#tadc Kinger#tadc Ragatha#tadc Jax#tadc gangle#tadc zooble#tadc Pomni#tadc caine#bad ending#sophont ai

231 notes

Text

i think itd be kinda funny/horrifying if in the scifi robotfuture folks love to write about theres like this phenomenon once they start rolling out the first like fully functional and independently thinking androids where theres so much immediate hype that every big tech jerkoff starts pumping out their own versions. so theres this huge goldrush/space race fusion type scramble to create the next big innovation in android tech and some company genius gets the idea "hey, a bunch of animal species are going extinct at rapid rates lately (i wonder why) what if we made super realistic exotic animal bots for all those richass tiger king types?"

so they make some animal shaped robo-bods and stick the standard android brain in it just training it off a bunch of data about animal behavior instead of human behavior to get the sounds and body language down right, but since its brain was basically ripped from the model for humanoid androids the default ai-learning software in there is also designed to continue learning from interactions with humans and adapt based on that. and no one realizes the full scope of their error until the animaldroids have near human level intelligence already and in the time since their launch theres been a whole human rights kerfuffle about it you know how it goes so now youve just got a bunch of animal androids that can think and talk and behave like humans just kinda hanging out among the usual humanoid scifi bots.

and thats how the furries win the future.

167 notes

Text

The Metaverse: A New Frontier in Digital Interaction

The concept of the metaverse has captivated the imagination of technologists, futurists, and businesses alike. Envisioned as a collective virtual shared space, the metaverse merges physical and digital realities, offering immersive experiences and unprecedented opportunities for interaction, commerce, and creativity. This article delves into the metaverse, its potential impact on various sectors, the technologies driving its development, and notable projects shaping this emerging landscape.

What is the Metaverse?

The metaverse is a digital universe that encompasses virtual and augmented reality, providing a persistent, shared, and interactive online environment. In the metaverse, users can create avatars, interact with others, attend virtual events, own virtual property, and engage in economic activities. Unlike traditional online experiences, the metaverse aims to replicate and enhance the real world, offering seamless integration of the physical and digital realms.

Key Components of the Metaverse

Virtual Worlds: Virtual worlds are digital environments where users can explore, interact, and create. Platforms like Decentraland, Sandbox, and VRChat offer expansive virtual spaces where users can build, socialize, and participate in various activities.

Augmented Reality (AR): AR overlays digital information onto the real world, enhancing user experiences through devices like smartphones and AR glasses. Examples include Pokémon GO and AR navigation apps that blend digital content with physical surroundings.

Virtual Reality (VR): VR provides immersive experiences through headsets that transport users to fully digital environments. Companies like Oculus, HTC Vive, and Sony PlayStation VR are leading the way in developing advanced VR hardware and software.

Blockchain Technology: Blockchain plays a crucial role in the metaverse by enabling decentralized ownership, digital scarcity, and secure transactions. NFTs (Non-Fungible Tokens) and cryptocurrencies are integral to the metaverse economy, allowing users to buy, sell, and trade virtual assets.

Digital Economy: The metaverse features a robust digital economy where users can earn, spend, and invest in virtual goods and services. Virtual real estate, digital art, and in-game items are examples of assets that hold real-world value within the metaverse.

Potential Impact of the Metaverse

Social Interaction: The metaverse offers new ways for people to connect and interact, transcending geographical boundaries. Virtual events, social spaces, and collaborative environments provide opportunities for meaningful engagement and community building.

Entertainment and Gaming: The entertainment and gaming industries are poised to benefit significantly from the metaverse. Immersive games, virtual concerts, and interactive storytelling experiences offer new dimensions of engagement and creativity.

Education and Training: The metaverse has the potential to revolutionize education and training by providing immersive, interactive learning environments. Virtual classrooms, simulations, and collaborative projects can enhance educational outcomes and accessibility.

Commerce and Retail: Virtual shopping experiences and digital marketplaces enable businesses to reach global audiences in innovative ways. Brands can create virtual storefronts, offer unique digital products, and engage customers through immersive experiences.

Work and Collaboration: The metaverse can transform the future of work by providing virtual offices, meeting spaces, and collaborative tools. Remote work and global collaboration become more seamless and engaging in a fully digital environment.

Technologies Driving the Metaverse

5G Connectivity: High-speed, low-latency 5G networks are essential for delivering seamless and responsive metaverse experiences. Enhanced connectivity enables real-time interactions and high-quality streaming of immersive content.

Advanced Graphics and Computing: Powerful graphics processing units (GPUs) and cloud computing resources are crucial for rendering detailed virtual environments and supporting large-scale metaverse platforms.

Artificial Intelligence (AI): AI enhances the metaverse by enabling realistic avatars, intelligent virtual assistants, and dynamic content generation. AI-driven algorithms can personalize experiences and optimize virtual interactions.

Wearable Technology: Wearable devices, such as VR headsets, AR glasses, and haptic feedback suits, provide users with immersive and interactive experiences. Advancements in wearable technology are critical for enhancing the metaverse experience.

Notable Metaverse Projects

Decentraland: Decentraland is a decentralized virtual world where users can buy, sell, and develop virtual real estate as NFTs. The platform offers a wide range of experiences, from gaming and socializing to virtual commerce and education.

Sandbox: Sandbox is a virtual world that allows users to create, own, and monetize their gaming experiences using blockchain technology. The platform's user-generated content and virtual real estate model have attracted a vibrant community of creators and players.

Facebook's Meta: Facebook's rebranding to Meta underscores its commitment to building the metaverse. Meta aims to create interconnected virtual spaces for social interaction, work, and entertainment, leveraging its existing social media infrastructure.

Roblox: Roblox is an online platform that enables users to create and play games developed by other users. With its extensive user-generated content and virtual economy, Roblox exemplifies the potential of the metaverse in gaming and social interaction.

Sexy Meme Coin (SEXXXY): Sexy Meme Coin integrates metaverse elements by offering a decentralized marketplace for buying, selling, and trading memes as NFTs. This unique approach combines humor, creativity, and digital ownership, adding a distinct flavor to the metaverse landscape. Learn more about Sexy Meme Coin at Sexy Meme Coin.

The Future of the Metaverse

The metaverse is still in its early stages, but its potential to reshape digital interaction is immense. As technology advances and more industries explore its possibilities, the metaverse is likely to become an integral part of our daily lives. Collaboration between technology providers, content creators, and businesses will drive the development of the metaverse, creating new opportunities for innovation and growth.

Conclusion

The metaverse represents a new frontier in digital interaction, offering immersive and interconnected experiences that bridge the physical and digital worlds. With its potential to transform social interaction, entertainment, education, commerce, and work, the metaverse is poised to revolutionize various aspects of our lives. Notable projects like Decentraland, Sandbox, Meta, Roblox, and Sexy Meme Coin are at the forefront of this transformation, showcasing the diverse possibilities within this emerging digital universe.

For those interested in the playful and innovative side of the metaverse, Sexy Meme Coin offers a unique and entertaining platform. Visit Sexy Meme Coin to explore this exciting project and join the community.

274 notes

Text

Prologue: Late Nights and Blooming Dreams

Pairing: Dino x Fem!Reader

Story tags: Barista!Chan, Software Engineer!Reader, Best Friends to Lovers, fluff, humor, one-sided pining(?) to mutual pining, very light on angst, OT13, other members as helpful (and unhelpful) side characters

Content Warnings: None

Word Count: 1.8K

Author's Note: My first fic on here, and my first Seventeen fanfic ever 😶 Please be kind, but also feedback is welcome and appreciated! Also, this is the prologue to a multi-chapter fic that I have currently in the works, so I hope you'll look forward to it 😊

🎧 Music playing at The Cozy Bloom

Series masterlist | Read on AO3 | Next

7 years ago

Every day was bleeding into the next. Wake up, go to class, study, eat somewhere in between, sleep, rinse and repeat. It was getting tiring. But it was finals week. You couldn’t stop now, and so you packed your belongings, heading back to your current favorite café, holding on tightly to your dreams even as they were slipping away alongside your sanity.

“Hi again, what can I get for you?”

In the months since this place opened up you’d seen four people, including the manager, working here every day, and to be honest this guy was your favorite.

You couldn’t fully explain why. He just had a charming air about him. From his casual smile which naturally drew the attention of any customer he spoke with, to the moments when you could hear him laugh, a full and bright sound that seemed unique to him. He just had this presence that stood out in a pleasant way. Plus he was cute.

“I’d like a pourover, please,” you said.

It occurred to you that you didn’t even know his name. You took a quick glance at his name tag.

“Okay, I’ll have that out for you here in a few minutes,” said Chan.

And so you returned to your corner, sitting by the front window, the light above your table for two waning, calling you to come quietly observe the restless city hustling even past sundown. However, before you even had the chance to fade along with it, the code editor on your laptop had loaded, and it was time for you to get hustling as well.

This place had become a bit of a cult favorite, especially among students. Chan always saw a select few on a near daily basis, some of whom he knew well enough, or had gotten to know well enough, to know that they went to the same school that he did, what they were studying, and at least a little of their personalities.

There was Jun, a foreign exchange student in musical theatre whom he met at a dance class. An actor and dancer well-liked by everyone at school for his talent, kindness, and effortless sense of humor.

Vernon and Seungkwan, his friends and roommates, one of whom studied computer science while the other majored in communications. Lately Chan had been trying to get them to apply for the job openings here, promising he’d train them and get them hooked on the vast world of making coffee in no time.

There was also Jihoon, a music production student. He once gave Chan a very detailed critique of his pour over technique that forever changed the way Chan viewed coffee. That’s as far as their interactions went, though.

And then there was you. He didn’t even know your name. He had only ever caught glimpses of you on campus. He always saw you coding while you were here, so he asked Vernon about you one time, but all he said was “Why? You interested?” Apparently he had never really spoken to you either, despite being in some classes together.

You came in and did the same things as always: ordered your usual, sat at that table in the corner, and started coding. And until recently, you always came in during the morning three days a week, Mondays, Wednesdays, and Fridays. The last few days, however, you’d been coming in every evening.

It was much less busy this time of day, of course. Maybe Chan would talk to you today.

On second thought: no, he shouldn’t bother you. It’s finals week, so you must’ve been really busy to come in this late.

And so he simply made your coffee and brought it to you, exchanging nothing but polite pleasantries as per usual.

The next hour passed by slowly. There were hardly any customers. One or two enjoyed a late evening drink and meal, while others needed a boost to push through their neverending workload. It was closing time, and almost everyone, the quiet diners and the caffeine-boosted workaholics alike, called it quits for the night.

“I finished all the dishes. Well, most of them,” said Mingyu, then he nodded in your direction. “I’ve gotta hurry home. My baby’s been sick. Can you take care of the rest?”

Chan looked at you. At some point, you had laid your head down on the table and dozed off, your coffee half-finished and your laptop asleep alongside you.

“Go take care of your dog. I’ll make sure to wake her and close up shop,” he said.

“You’re the best!” said Mingyu before hurrying out.

The café was quiet. Just the two of you. He felt kinda bad about waking you, but he needed to finish cleaning and lock up the shop. And so he approached you, hoping you would just wake up on your own, but you didn’t.

“Excuse me,” he said softly, and just as he was about to tap your shoulder your phone buzzed.

Hey. Did you finish writing the requirements doc? Sorry I couldn’t get my share done. Exams have been a real bitch these last few days. Appreciate it

Buzz. Then another one.

Have you fixed that bug yet? I couldn’t figure out what I did to cause it :/ If anyone can fix it it’s u!! ♡ Lmk what you find!

Chan rolled his eyes.

He felt bad about seeing your texts, but good grief. Why are these people bothering you about their problems? And can’t they see it’s half past 10:00?

Surprisingly, you didn’t wake up. You didn’t even move a muscle.

Chan let out a quiet sigh. Now he really felt bad about waking you.

He then left you for a minute, grabbing his jacket, and when he returned by your side he bent down and gently draped it over you, feeling a brief jolt in his fingers as they accidentally grazed over your soft hair. After he adjusted it to make sure it covered you well enough, you began to move slightly, causing him to startle a bit, but it was just a subtle stir as you inhaled and exhaled deeply, burrowing yourself a little more under his jacket, your nose ghosting over the collar while a contented smile peeked out from underneath.

His heart sped up, and he quietly raced to do anything other than watch you sleep.

A warm, earthy aroma entwined with the invigorating smell of hot coffee filled the air. You struggled to get your eyes open for what felt like minutes before finally you noticed the bright red and blue pens stacked on top of a notebook open to a page full of chicken scratches, your reflection in the black screen of your laptop staring back at you as you realized that you had fallen asleep at the café. As if that wasn’t enough to startle you awake, that musky scent you were relishing was coming from some jacket draped over your back. Whose jacket was this?

CRASH!

“AH!”

“Woah!”

The sound of glass shattering right behind you made you jump from your seat, the nice-smelling jacket dropping from your shoulders to be forgotten on the floor.

You turned around, finding that barista from earlier — Chan, you remember — startled as he glanced between you and the fragments of glass scattered across the floor, just a bit of coffee trickling toward both your feet.

The room dropped to silence as you both simply stared at each other and the broken glass in shock.

He was the first to speak, clearing his throat before saying, “You’re awake.”

“Obviously,” you retorted, immediately regretting it as soon as you heard yourself.

Here was this sweet barista who had kindly lent you his jacket in your sleep and you just had to be a smart ass.

Instead of looking at you funny or being startled by your rudeness, Chan merely laughed. And laughed in the way you had only heard from afar in your little nook, except now it was crystal-clear, a mere table away from you, tickling your ears with its brilliance.

“I was drinking some coffee while I was waiting for you to wake up, but I kinda dozed off and knocked it over. I am so sorry that I woke you up.” He bowed politely, but then his cheeks flushed as he quietly added, “Er, maybe you would’ve preferred I woke you up at closing time so that you didn’t have to, y’know, sleep at a table with some stranger’s jacket covering you. I’m sorry.”

His words always seemed to come out so confidently, so seeing him become shy all of a sudden got a soft chuckle out of you.

“It’s fine,” you said. “I’m the one who should apologize for falling asleep when you should be home by now.”

“To be honest, you seemed like you really needed the rest,” he admitted sheepishly. Then his bashfulness faded as he held out his hand.

“I’m Chan.”

“I already read your name ta–” you stopped yourself. “I’m Y/N,” you corrected yourself, shaking his hand.

God, you were such a recluse. When was the last time you physically spoke to a human being?

Chan laughed, and suddenly you felt like less of an asshole again.

“Well, Y/N. I’m glad to have finally met you properly,” he said. Then he walked around the mess he made and behind the front counter, heading toward what seemed to be a supply closet, smiling at you as he said, “I’ve gotta clean that mess and close up. We’ll have to talk some more next time. You’re pretty funny.”

Your brain lit up at the possibility of there being a “next time.” You found him to be rather interesting himself. However, instead of admitting that you meekly nodded and let out a little “Yeah.”

He reached for the doorknob of the closet, but before doing so stopped and turned to you, just as you were about to check your phone for any messages you might’ve missed, his expression soft with worry.

“By the way,” he said, “I hope this doesn’t sound weird or anything, but… I hope you take care of yourself.”

You tilted your head curiously.

“I just mean,” he paused. “Try not to pass out again, okay? You’re always working really hard, so… just ask for help sometimes, you know? I don’t really know much about coding — though I have a friend who does — but anyway…”

“I’ll be careful,” you said, touched by his concern. “Thank you.”

“Of course,” he replied, smiling gently.

You said your goodbyes, packed your things, and left, even putting your phone in your backpack too. There was no need to think about coding, or the piling messages from your group project members to wile away the hours of your insomnia. Your mind was going to be dreaming about tonight’s events until sunrise, when you had to get up and do it all over again, except now you had something to look forward to in the evening.

#seventeen#svt fanfic#svt dino#dino x reader#lee chan#lee chan x reader#seventeen dino#fluff#seventeen fluff#seventeen fanfic#svt#seventeen ot13#svt ot13#seventeen x reader#dino x you#svt x reader#kpop#kpop fanfic#kpop fluff#a cozy bloom

57 notes

Text

In recent years, the realm of virtual reality (VR) has experienced a transformative evolution, establishing itself as a cornerstone of innovation across various industries. One of the trailblazers in this dynamic field is Simulanis, a VR development company based in India. With a focus on harnessing the power of immersive technology, Simulanis is not just creating virtual experiences; they are reshaping how businesses, education, and entertainment engage with audiences.

#VR Development India#Virtual Reality Solutions India#VR App Development India#Immersive VR Technology India#VR Game Development India#AR/VR Development Companies India#Virtual Reality Studios India#Custom VR Solutions India#Virtual Reality Content Creation India#VR Software Development India#VR Experience Design India#VR Simulation Development India#India VR Technology Providers#Virtual Reality for Businesses India#Enterprise VR Solutions India#VR Training Solutions India#Virtual Reality Development Companies India#Interactive VR Solutions India#VR for Education India#VR Hardware and Software Development India

1 note
